OpenAI just had its annual DevDay. A lot was launched, but (as expected) no new text models. Two of the main announcements were SDKs, and the model releases were simply broader rollouts of existing ones.

To call it a flop would be wrong. These new products are powerful, but unlike early Apple launches, we shouldn’t expect breakthroughs from these scheduled October events. OpenAI, and the broader AI industry, move too fast and are far too dynamic to hold major model releases for set dates.

That being said, lots of interesting things were launched, and their effects will be felt across a wide range of startups (not just the workflow tools like Zapier and n8n).

A history of OpenAI developer days

I went back and looked at what OpenAI launched at DevDay in 2024 and 2023.

2025: Apps in ChatGPT (Apps SDK), AgentKit, Sora 2 in the API, GPT-5 Pro full rollout

2024: Realtime API, Vision Fine-Tuning, Prompt Caching, Model Distillation

2023: GPT-4-Turbo (128K context), Assistants API, GPTs / Custom GPTs, DALL·E 3 API, multimodal (vision & voice), function calling enhancements & structured output

GPT-4-Turbo with 128k context was a massive launch at the time. Notably, it’s the only new model ever announced at a DevDay event.

That should be expected though.

OpenAI isn’t shipping hardware products. And while new models take much longer to build than standard software, both the external pressure from competitors and the internal pressure to continually launch better models are so strong that models get launched ASAP, whether that’s a month, a week, or even a day before DevDay.

All that to say, don’t expect major model announcements at these events in the future.

Alright, let's get back on track and take a look at what was actually launched this year.

Launch highlights

Apps in ChatGPT / Apps SDK: Built on the Model Context Protocol (MCP), the new Apps SDK lets developers make their apps discoverable and callable directly inside ChatGPT conversations.

AgentKit: OpenAI’s new toolkit for building, deploying, and optimizing agents. Visual workflow builder, an embeddable chat UI, and built-in evals (including third-party model support!!).

Codex: Now generally available.

Sora 2 in the API: The text-to-video model is now available via the OpenAI API.

GPT-5 Pro (API): OpenAI’s smartest and most expensive model ($15/$120 per 1M input/output tokens) is now generally available. Input tokens are 12x more expensive than GPT-5, for reference.

gpt-realtime-mini: A low-latency variant that’s 70% cheaper than the large model.

gpt-image-1-mini: A smaller image generation model that is 80% less expensive than the large model.

AgentKit

For a deep dive, check out this video here.

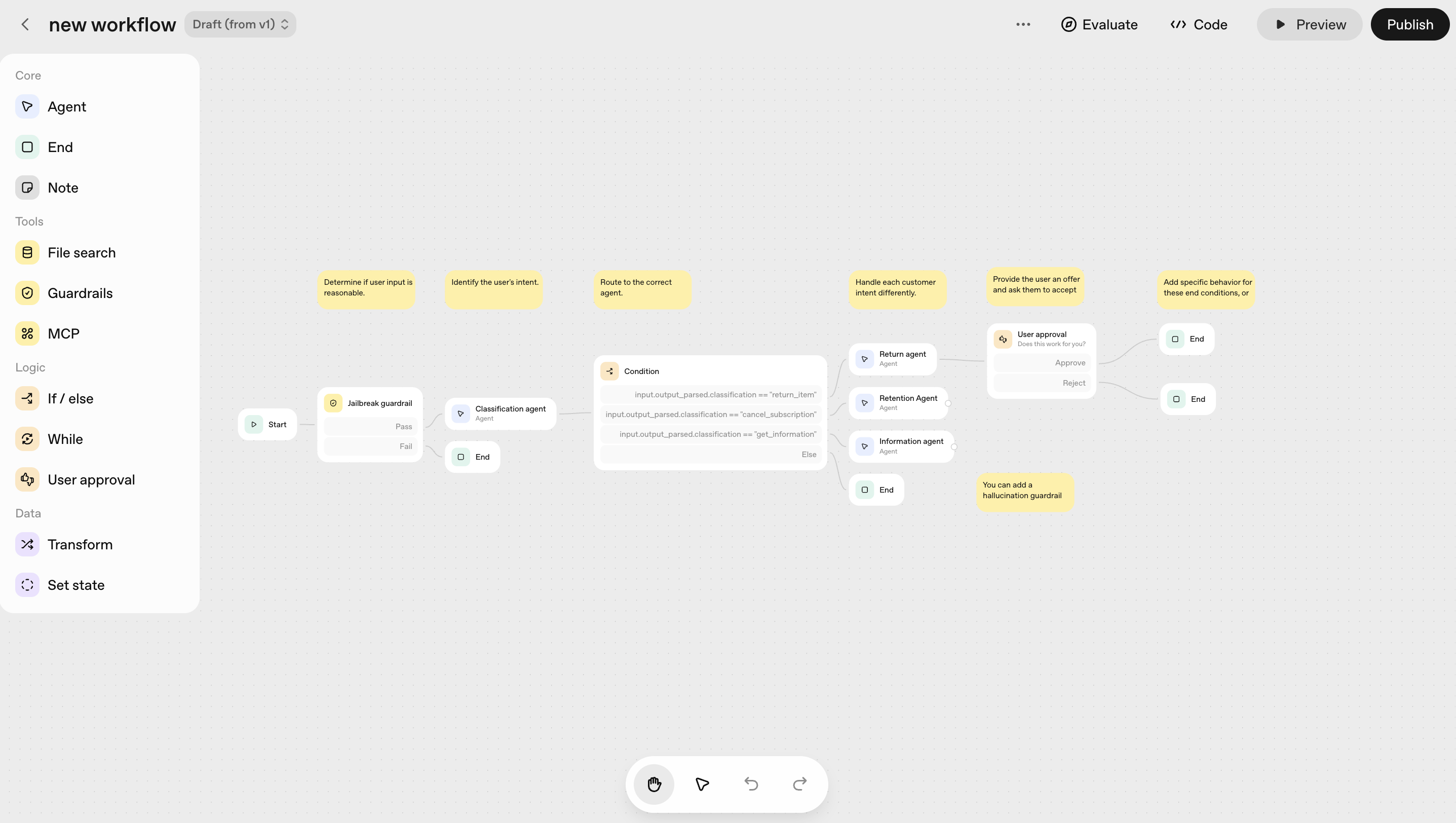

AgentKit is a bundle of tools for developers and teams to build, deploy, and optimize agents. There are a few integral parts:

- Agent builder: Visual, drag-and-drop canvas for creating/testing agents

- Connector registry: A central place where admins manage MCP tool access across all OpenAI products (including in the agent builder canvas)

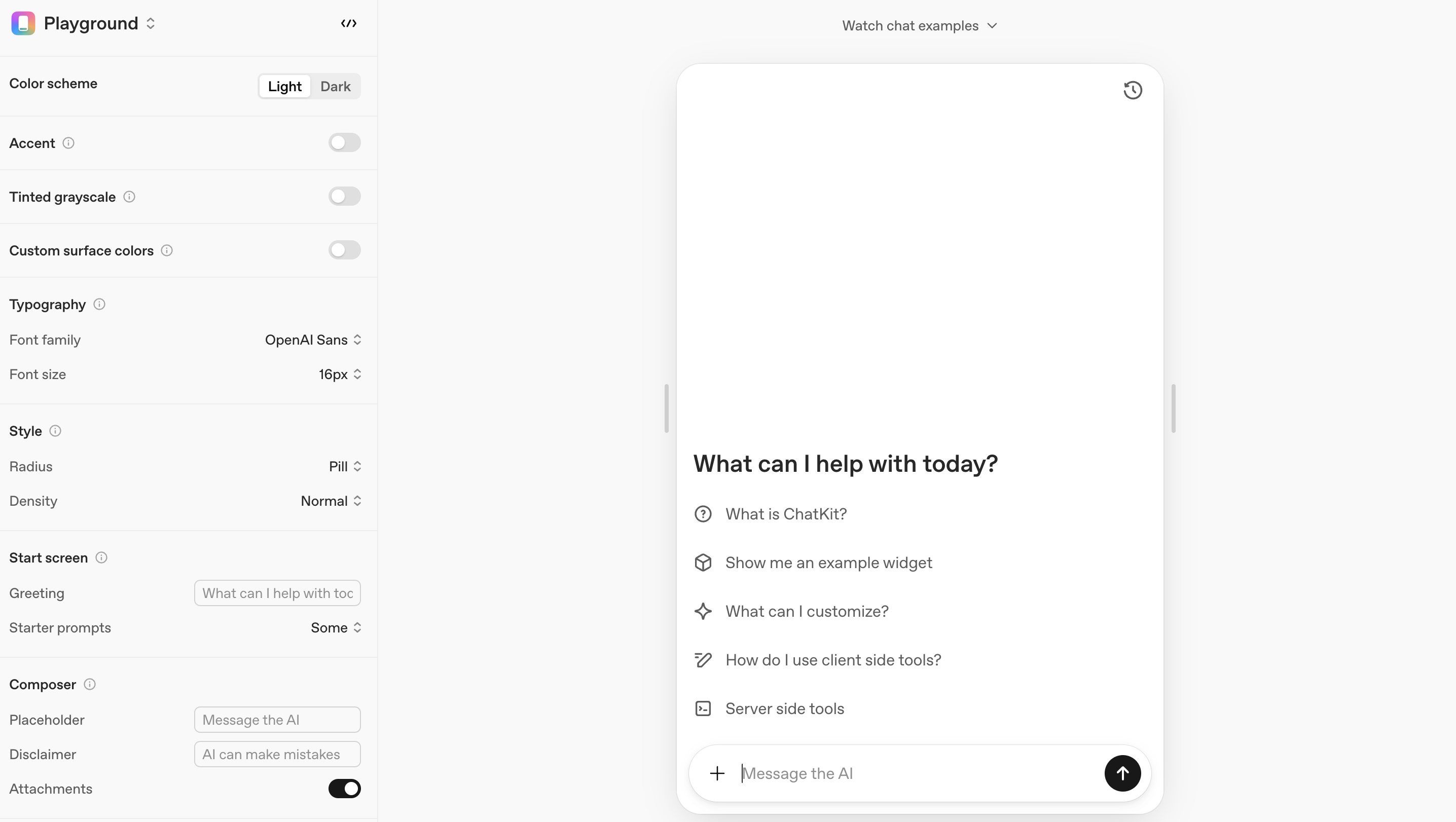

- ChatKit: Built-in UI and logic for embedding chat-based agent experiences in your product/website

- Evals: Bring your own datasets to evaluate agents and ensure consistent performance across different inputs

- Observability and monitoring: Track end-to-end usage of your deployed agents

- Auto-optimization: Automatically optimize prompts based on human feedback and eval results

Under the hood, AgentKit is built on top of the Agents SDK, which leverages the Responses API. This foundation enables multi-agent orchestration, tool calling, chaining logic, and more.

Startups affected

You still need a developer to handle integrating the agent and getting it live. Even with ChatKit, it isn’t as simple as dropping a script into your site header. You’ll still need to spin up a server and handle some backend work. It’s not the end of the world, but it’s also far from a “no-code builder for AI agents or workflows”.

So did this kill Zapier, n8n, and all the other workflow automation startups? Probably not. In fact, there’s another category of startups that is far more affected (more on that later). For now, let’s focus on Zapier.

Workflow software is complicated because of the bajillion edge cases and integrations.

Zapier’s integration ecosystem is a huge moat, even with the rise of MCP (which has its issues). Direct integrations are much more reliable and are deeply designed for Zapier’s product ecosystem, which makes it extremely user-friendly. Combined with a mature, decade-old platform, this launch probably won’t impact Zapier much. The use cases are surprisingly different, even if the canvas looks similar. Additionally, AgentKit can only start from a user message; there’s no concept of triggers (yet).

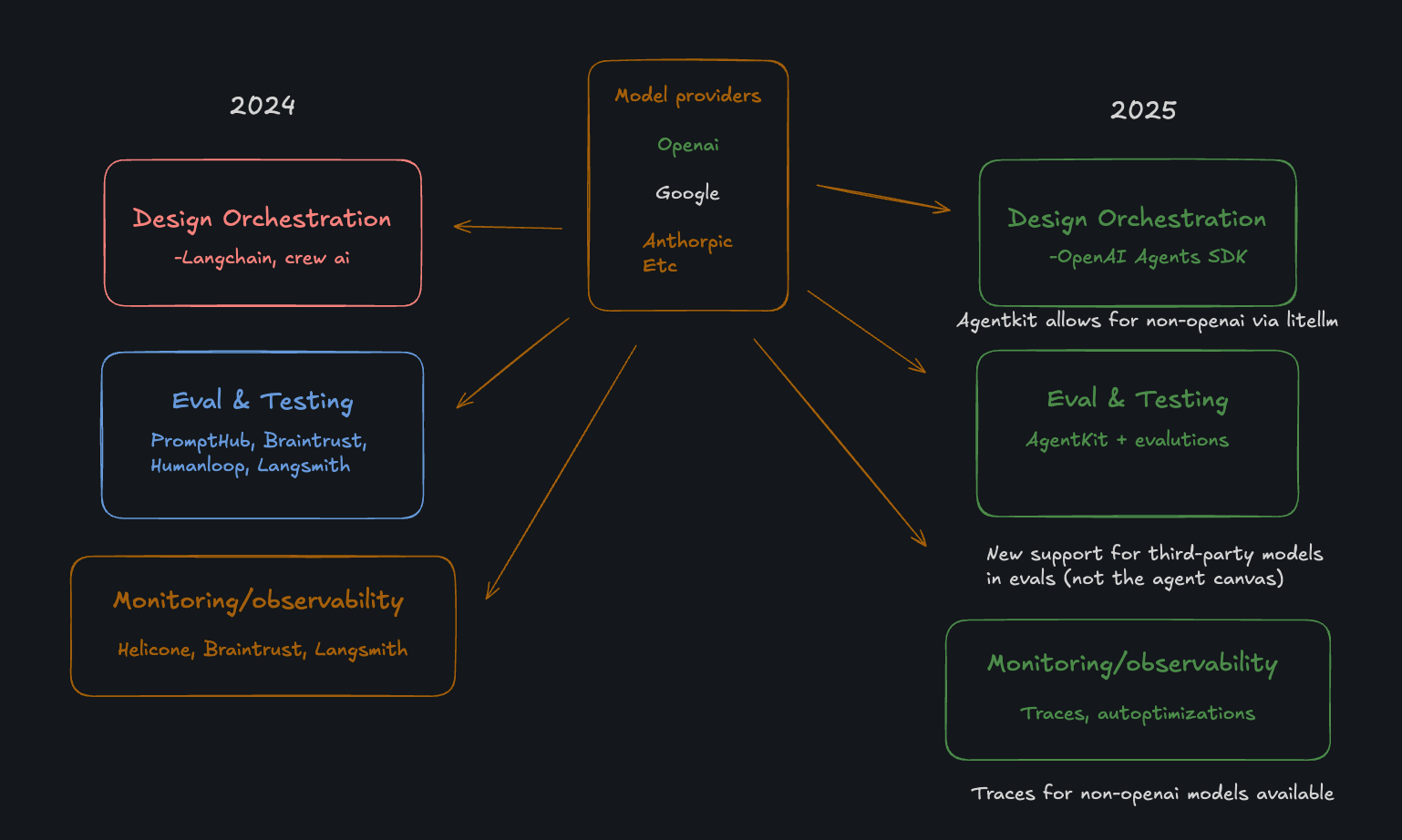

The area I’d be more concerned about is the developer tools space.

As someone in that space, one line really stood out to me:

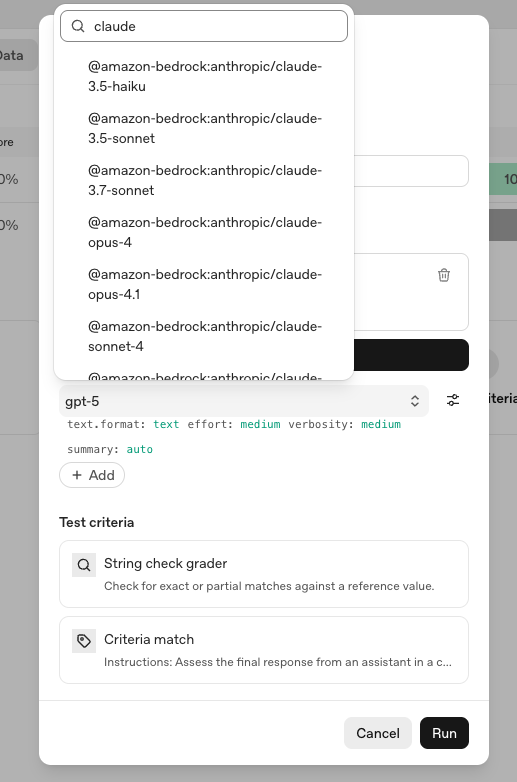

Third-party model support–evaluate models from other providers within the OpenAI Evals platform.

This is an extremely bold move from OpenAI. They’re basically saying, “we don’t care which models you eval against, we’re confident in ours.”

If you run an AI dev tool company, you’ve faced the question we must all ask ourselves: “What happens if OpenAI decides to do what you do?” Until now, you had a credible answer, something like:

Users want to pick between models. OpenAI’s products and tooling will always be limited to their models. Same goes for Anthropic.

Well, that’s no longer the case!

It’s important to note that third-party model support in OpenAI’s dev platform is currently limited to evaluations. You can’t select other models to run specific nodes in your AgentKit workflow builder (for now?). But you can take the same prompts and eval them individually.
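To make "take the same prompts and eval them individually" concrete, here is a minimal, provider-agnostic sketch in plain Python. It does not use the OpenAI Evals platform or any real SDK. The two "models" are hypothetical stub functions standing in for calls to different providers, and `EvalCase`, `run_eval`, and the exact-match grader are names invented for this illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class EvalCase:
    question: str  # value substituted into the shared prompt template
    expected: str  # reference answer used by the grader


# One prompt template, reused unchanged for every model under test.
PROMPT_TEMPLATE = "Answer with a single word. Question: {question}"


# Hypothetical stand-ins for real provider calls (assumption: in practice
# each of these would wrap a different vendor's API client).
def stub_model_a(prompt: str) -> str:
    answers = {"capital of France": "Paris", "capital of Japan": "Tokyo"}
    for key, value in answers.items():
        if key in prompt:
            return value
    return "unknown"


def stub_model_b(prompt: str) -> str:
    return "Paris" if "France" in prompt else "unknown"


def run_eval(
    models: Dict[str, Callable[[str], str]],
    cases: List[EvalCase],
) -> Dict[str, float]:
    """Run every case through every model and return accuracy per model."""
    scores: Dict[str, float] = {}
    for name, model in models.items():
        passed = 0
        for case in cases:
            prompt = PROMPT_TEMPLATE.format(question=case.question)
            output = model(prompt)
            # Exact-match grader, ignoring case and surrounding whitespace.
            if output.strip().lower() == case.expected.strip().lower():
                passed += 1
        scores[name] = passed / len(cases)
    return scores


CASES = [
    EvalCase("What is the capital of France?", "Paris"),
    EvalCase("What is the capital of Japan?", "Tokyo"),
]

if __name__ == "__main__":
    results = run_eval({"model-a": stub_model_a, "model-b": stub_model_b}, CASES)
    for name, accuracy in results.items():
        print(f"{name}: {accuracy:.0%} exact match")
```

The point of the design is that the prompt and dataset are held fixed while only the model callable changes, which is what makes scores comparable across providers.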

Unlike Zapier and n8n, which have had years (over a decade, in Zapier’s case) to build deeply nuanced products, most AI dev tool startups are only two or three years old.

Let’s look at the market.

Just a year ago, even if you wanted to build on a full OpenAI stack, you couldn’t. You needed third-party tools. Two reasons why:

- OpenAI didn’t have the full-stack functionality yet

- Everything they did have was tightly coupled to their own models

The story looks very different today:

- Prompts, evals, tracing, agent builder. A complete workflow loop.

- You can use any model across most functions (Agents SDK, evals, tracing). The few places you can’t (like prompts and the agent canvas) might just be a “for now” limitation.

So what does this mean for all AI dev tool startups? Are they all dead? Not really, but this changes things.

There will always be a segment of the market that wants point solutions. “I don’t want to use the Agents SDK, I only need evals and tracing and AI DEV TOOL COMPANY XYZ has some specific features I want.”

But there’s also a large segment of the market that prefers to have a single, trusted vendor across the stack (“We’re a Microsoft shop”). No one ever got fired for buying OpenAI.

The moat for AI dev tool companies, as with most startups, will come down to execution and depth.

The ChatGPT App Store

Ah yes, the app store. Here are the highlights:

- Users can now “chat with apps”

- Developers can build apps using the Apps SDK, letting logic and custom UI run inside ChatGPT via MCP

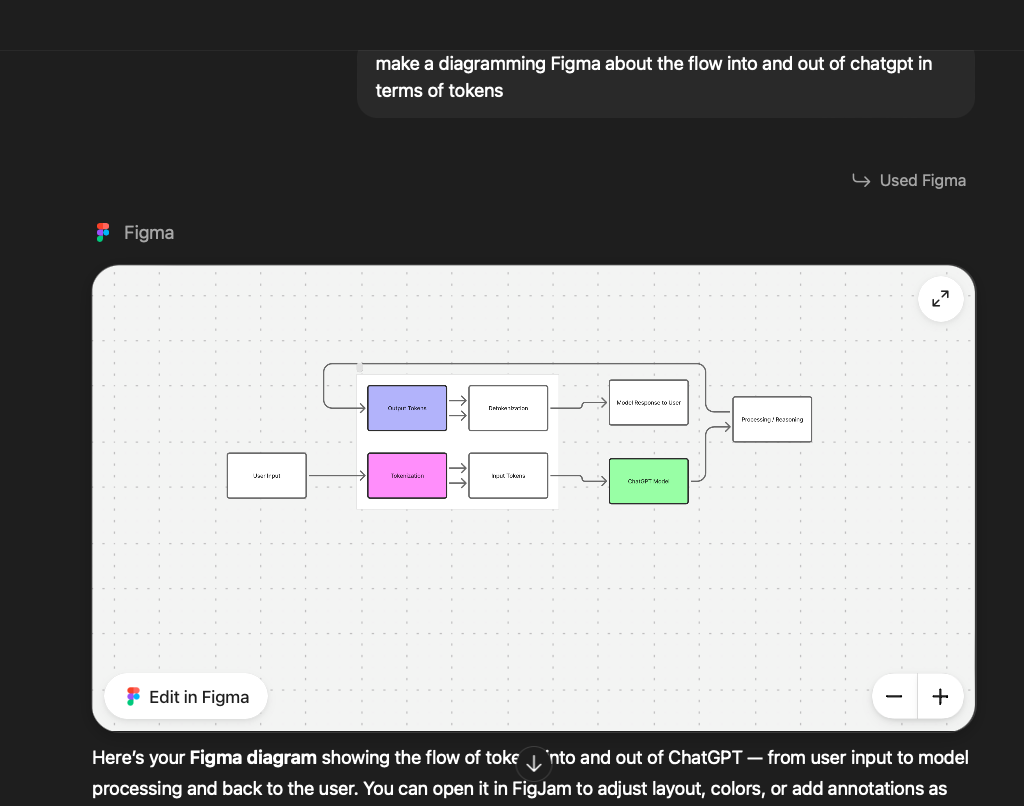

- Currently only supporting a few partners (Spotify, Canva, Coursera, Figma, etc.)

- Will open up for public submissions and monetization later this year, with an approval process and directory (app store)

- Apps can access conversation context and memory (!) if needed

A lot to unpack here, but probably best saved for a full-length piece.

On the question of “Will this work, and will it be as big as or bigger than the Apple App Store?”, a few comparisons come to mind:

- If ChatGPT replaces Google search, then the app store = Chrome extension store? Not super interesting

- If ChatGPT is the operating system for work, the app store = Slack’s app store? Do you use Slack to update CRM records?

- What happened to ChatGPT plugins and public Custom GPTs?

ChatGPT is, of course, a very different platform from the ones that came before it, and past performance does not guarantee future results. Plugins and Custom GPTs weren’t MCP servers, and thus lacked much of the functionality needed to easily build something useful and powerful.

A few interesting tidbits

I already shared my juiciest tidbit (support for third-party models) but two other things stood out:

- gpt-realtime-mini is 70% cheaper than the larger model.

- gpt-image-1-mini is 80% cheaper.

Margins play a massive role for anyone building in AI.

Huge cost drops for frontier models unlock a whole new set of opportunities that simply weren’t economically viable before. Expect to see and hear a lot more AI.
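As a rough illustration of why those price cuts matter, the arithmetic below converts a percentage discount into how much more usage a fixed budget buys. It uses only the 70% and 80% figures quoted above. The function name `usage_multiplier` is made up for this sketch, and it assumes your usage pattern is the same on the mini model as on the large one.

```python
def usage_multiplier(percent_cheaper: int) -> float:
    """Return how many times more usage the same budget buys after a price cut."""
    if not 0 <= percent_cheaper < 100:
        raise ValueError("percent_cheaper must be in the range [0, 100)")
    # If the new price is (100 - p)% of the old one, the same spend
    # covers 100 / (100 - p) times as much usage.
    return 100 / (100 - percent_cheaper)


if __name__ == "__main__":
    for model, cut in [("gpt-realtime-mini", 70), ("gpt-image-1-mini", 80)]:
        print(f"{model}: {usage_multiplier(cut):.2f}x usage for the same spend")
```

In other words, an 80% cut means the same budget stretches 5x as far, and a 70% cut stretches it roughly 3.3x.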

Closing thoughts

After every major launch, there’s always a wave of people claiming that hundreds of startups just got killed. That’s rarely true to the extent people say, but there’s usually some truth to it.

The truth is these launches shake up an industry that’s still extremely early and rapidly evolving. As the ground shifts below, startups need to adapt quickly.