We recently wrote about how AI dev tool startups may be in trouble as OpenAI continues to build out its developer platform (notably including support for third-party models). While this is troublesome for startups, it’s great news for anyone building with these models. On top of better dashboard tooling, these model providers are folding more complexity into the models themselves.

The loop is simple: developers and researchers discover new hacks to make LLMs perform better, and over time, model providers either train those improvements directly into the models (like Chain of Thought) or build first-party tools to address them (like Anthropic’s new memory and context-editing features).

In this post, we’ll break down how the context and prompt engineering stack has evolved over the past few years, and what that means for builders in 2025.

Model advances that are fully baked-in

Generally, model improvements related to making developers’ lives easier fall into two categories: those baked in during training and those launched as tools directly accessible via the API.

The clearest example of something fully baked in is Chain of Thought (CoT) reasoning. The first CoT paper was published in 2022, but the first reasoning model didn’t launch until 2024. What started as a prompt engineering method, telling models to “think step by step”, is now the norm for how all new models “think” to get better outputs. No extra instructions, no orchestration. Reasoning moved from a prompt pattern to a training paradigm.

Now, we’re seeing a similar shift with context management. See below from the Claude 4.5 Haiku system card (s/o Simon Willison for flagging it):

“For Claude Haiku 4.5, we trained the model to be explicitly context-aware, with precise information about how much of the context window has been used. This has two effects: the model learns when and how to wrap up its answer when the limit is approaching, and the model learns to continue reasoning more persistently when the limit is further away. We found this intervention—along with others—to be effective at limiting agentic ‘laziness’ (the phenomenon where models stop working on a problem prematurely, give incomplete answers, or cut corners on tasks).”

Managing context has always been a challenge, with trade-offs between retrieval, compression, and reasoning depth. What Anthropic describes here hints at what the future may look like: LLMs that don’t just know how much context remains, but can eventually manage it themselves.

Tools that extend and control context

Now for the context-engineering-related enhancements that aren’t baked-in but rather are exposed via tools in the API.

Memory

The memory tool from Anthropic (example below) lets Claude store and retrieve information across conversations through external files. More or less like giving Claude a scratchpad to read and write to.

The model chooses when to create, read, update, and delete files that persist across conversations. This allows it to build context over time in a file directory it can access when necessary, rather than keeping everything in the context window.

Without this, you’d need to choose between two bad options: stuff everything into the prompt (and risk hitting context limits: Why Long Context Windows Still Don't Work) or build out your own memory management system.

The memory tool abstracts all of that away. Plus, Claude has been trained to use this tool effectively, so it actually improves reasoning continuity rather than just extending context.

Context Editing

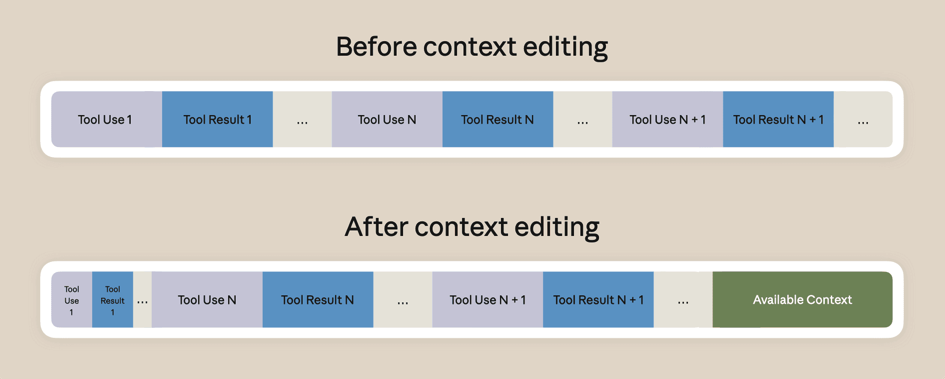

Also from Anthropic, context editing allows you to clear old tool results from earlier in the conversation. For now, it’s limited to only clearing tool results, but you can imagine how this could expand in the future.

When your input grows past a set threshold (say, 100k tokens), the API can automatically clear old tool results and replace them with placeholders, signaling to Claude that the context was trimmed.

You still have to manage other portions of the context, like messages, but the context editing tool is a step in the direction of built-in tooling to help manage context across a whole agentic interaction.

Prompt Caching

Prompt caching straddles the line between built-in and added-on features, since different providers handle it differently. For example, OpenAI manages prompt caching automatically, while Anthropic requires you to add a bit of code to your request to enable caching.

(More on prompt caching here: Prompt Caching with OpenAI, Anthropic, and Google Models).

Token Tracking

The last piece of Anthropic’s new toolset is built-in token awareness. While still light on public detail, this feature tracks available tokens throughout a session, giving both developers and the model a real-time sense of how much context space remains. Although it ties directly into memory and context editing, it can also support other use cases.

Retrieval (OpenAI)

Last year, OpenAI introduced a Retrieval API that lets developers store and retrieve information directly from a managed file store. It's essentially a built-in RAG system.

Instead of maintaining your own vector database and retrieval pipeline, you can now upload documents and let OpenAI handle indexing, search, and context injection automatically.

The blurring line

What’s interesting is that providers are now training their models to use these tools effectively. The boundary between what’s baked in and what’s tooled on is becoming increasingly fuzzy.

While these features are powerful, they still don’t cover everything in the context engineering stack. As they continue to improve, developers will spend less time building infrastructure and more time shaping how models think and interact.

Conclusion

The shift happening right now is simple but impactful: context management, the hardest part of building with LLMs, is steadily moving upstream.

Model providers are baking in reasoning (like Chain of Thought) and training models to understand their own context windows. They’re also shipping tools that handle the rest: memory, retrieval, context editing, caching, token tracking. What used to take entire pipelines now takes a few API parameters.

Context engineering has gone from infrastructure to design.

And as the stack keeps moving upstream, that’s where the real leverage will be.