This guide is for anyone using Anthropic as their model provider.

Integrating PromptHub with Anthropic’s models makes it easy to manage, version, and execute prompts, while decoupling prompt engineering from software engineering.

In this guide, we’ll cover:

- Retrieving prompts from your PromptHub library via the Get a Project’s Head (/head) endpoint and sending them to an Anthropic model

- Injecting dynamic data into your prompts

- Using the Run a Project (/run) endpoint to execute prompts directly through PromptHub

Before we jump in, here are a few resources you may want to open in separate tabs.

- PromptHub: PromptHub documentation

- PromptHub: Postman public collection

- Anthropic: Anthropic documentation

Setup

Here’s what you’ll need to integrate PromptHub with Anthropic’s models for local testing:

- Accounts and credentials:

- A PromptHub account: Sign up here if you don’t already have one.

- PromptHub API key: Generate your API key in your account settings.

- Anthropic API key: Grab your API key from the Anthropic console.

- Tools:

- Python

- Python libraries:

requestsandanthropic

- Project setup in PromptHub:

- Create a project in PromptHub and commit an initial version. Try out the prompt generator to quickly generate a prompt.

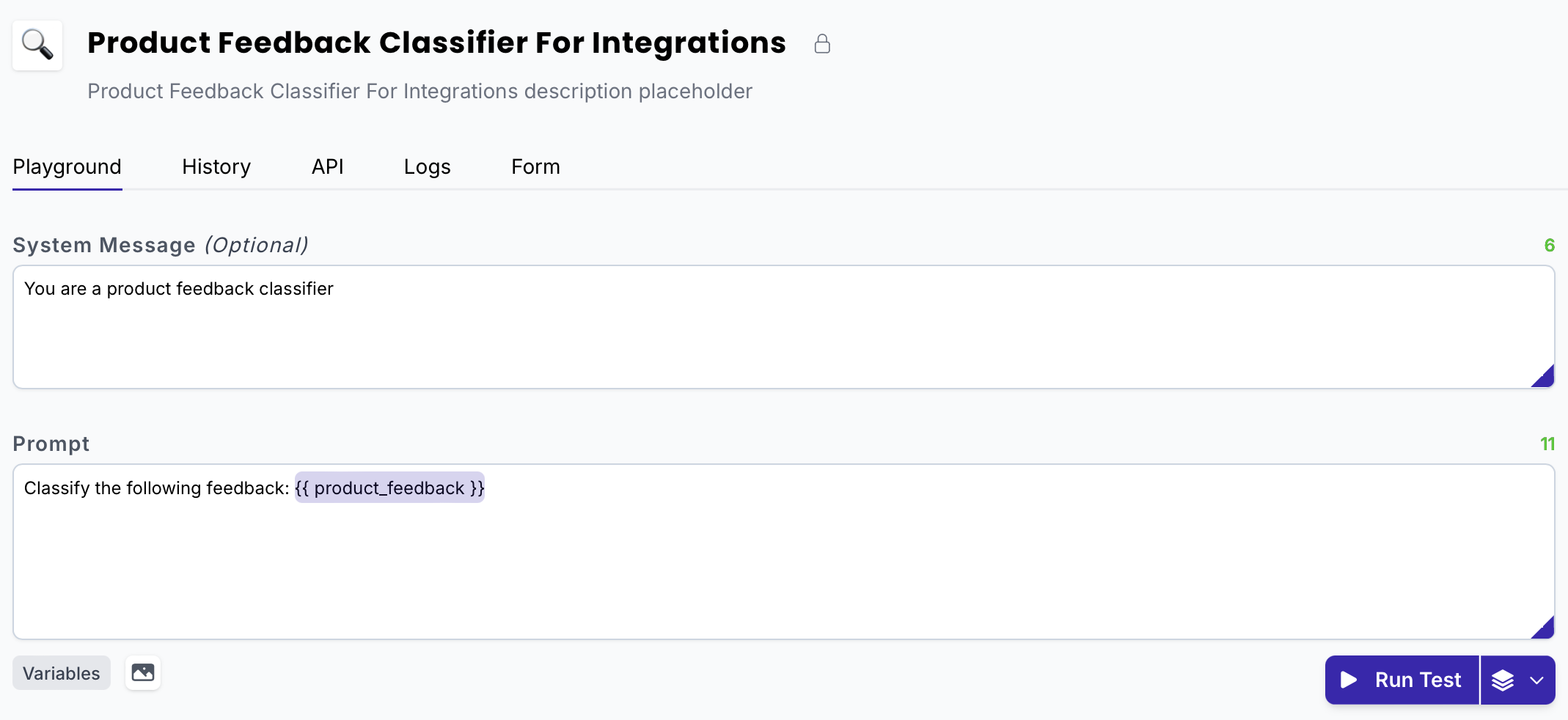

- For this guide, we’ll use the following example prompt:

System message

You are a product feedback classifier

Prompt

Classify the following feedback: {{product_feedback}}

Retrieving a prompt from your PromptHub library

To programmatically retrieve a prompt from your PromptHub library, you’ll need to make a request to our /head endpoint.

The /head endpoint returns the most recent version of your prompt and lets you execute the request to the LLM yourself. Because you call the model directly, the data you inject into the prompt never passes through PromptHub.

Making the request

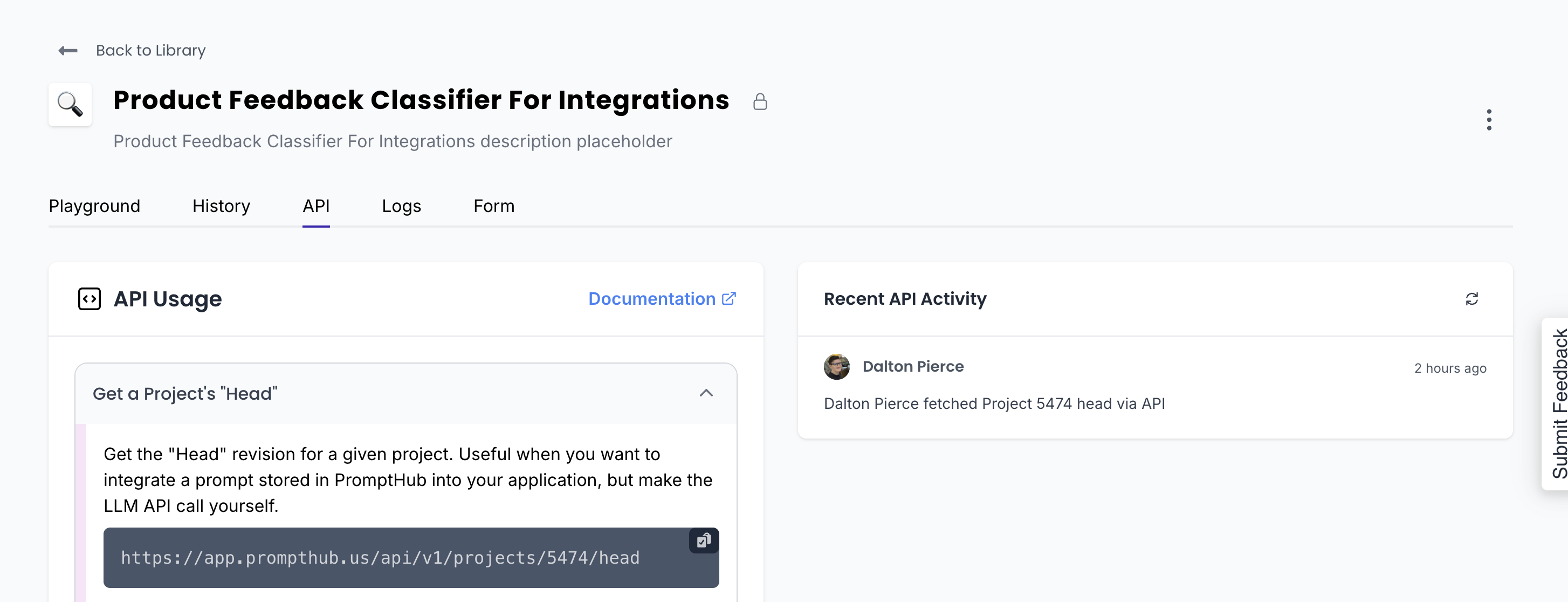

Retrieving a prompt is simple—all you need is the project ID. You can find the project ID in the project URL or under the API tab when viewing a project.

The response from this endpoint includes a few important items:

- formatted_request: An object structured to be sent to a model (Claude 3.5 Haiku, Claude 3 Opus, GPT-4o, etc.). We format it based on whichever model you're using in PromptHub, so all you need to do is send it to your LLM provider. Here are a few of the more important pieces of data in the object:

  - model: The model set when committing a prompt in the PromptHub platform.

  - system: The top-level field for system instructions, separate from the messages array (per Anthropic's request format).

  - messages: The array that has the prompt itself, including placeholders for variables.

  - Parameters like max_tokens, temperature, and others.

- variables: A dictionary of variables and their values set in PromptHub. You’ll need to inject your data into these variables.

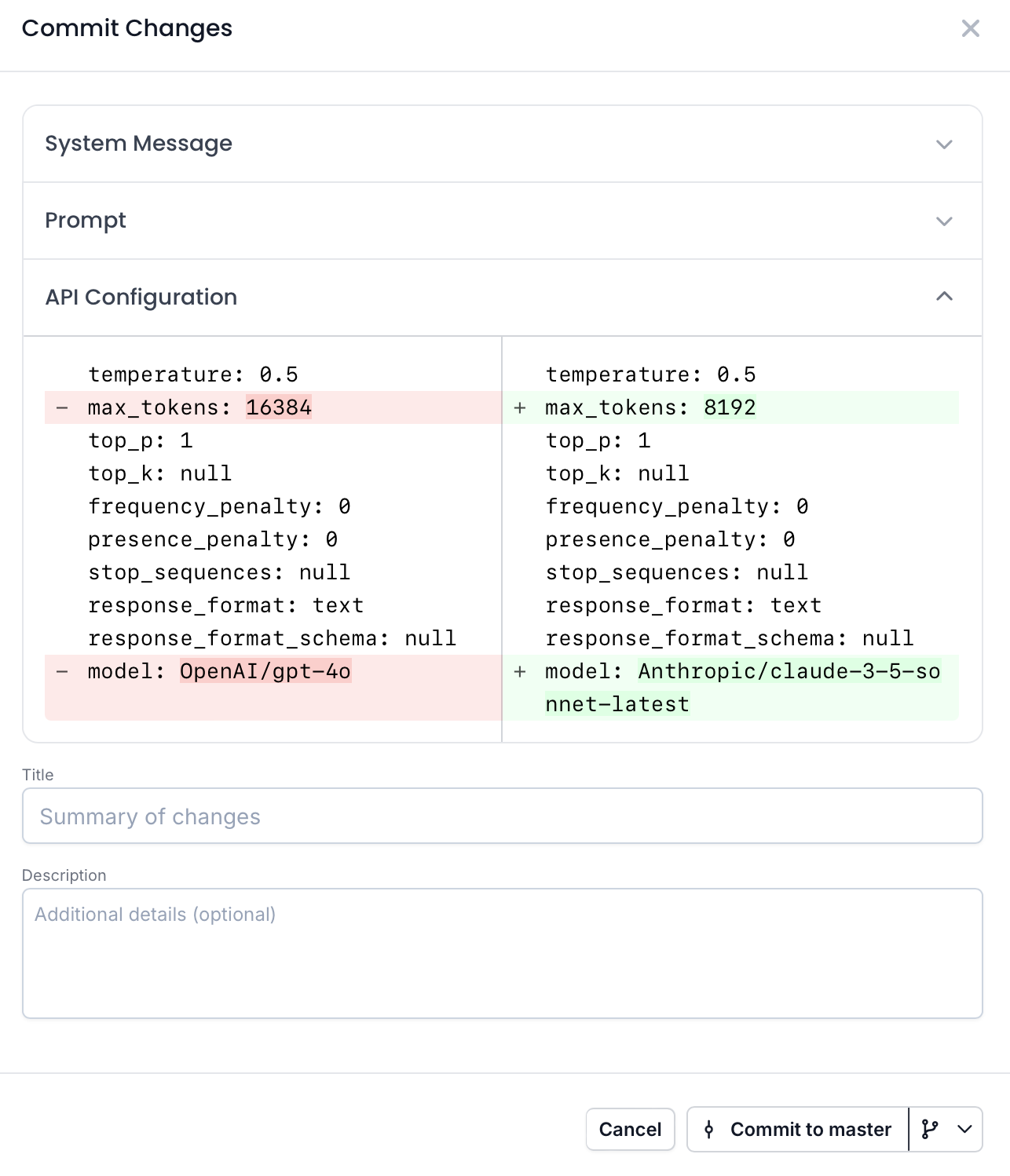

PromptHub versions both your prompt and the model configuration. So any updates made to the model or parameters will automatically reflect in your code.

For example, in the commit modal below, you can see that I am updating the API configuration from GPT-4o (used when creating our OpenAI integration guide) to Claude 3.5 Sonnet (claude-3-5-sonnet-latest). This automatically updates the model in the formatted_request.

Here’s an example response from the /head endpoint for our feedback classifier:

{
  "data": {
    "id": 11944,
    "project_id": 5474,
    "user_id": 5,
    "branch_id": 8488,
    "provider": "Anthropic",
    "model": "claude-3-5-sonnet-latest",
    "prompt": "Classify the following feedback: {{ product_feedback }}",
    "system_message": "You are a product feedback classifier",
    "formatted_request": {
      "model": "claude-3-5-sonnet-latest",
      "system": "You are a product feedback classifier",
      "messages": [
        {
          "role": "user",
          "content": "Classify the following feedback: {{ product_feedback }}"
        }
      ],
      "max_tokens": 8192,
      "temperature": 0.5,
      "top_p": 1,
      "stream": false
    },
    "hash": "d72de39c",
    "commit_title": "Updated to Claude 3.5 Sonnet",
    "commit_description": null,
    "prompt_tokens": 12,
    "system_message_tokens": 7,
    "created_at": "2025-01-07T19:51:14+00:00",
    "variables": {
      "system_message": [],
      "prompt": {
        "product_feedback": "the product is great!"
      }
    },
    "project": {
      "id": 5474,
      "type": "completion",
      "name": "Product Feedback Classifier For Integrations",
      "description": "Product Feedback Classifier For Integrations description placeholder",
      "groups": []
    },
    "branch": {
      "id": 8488,
      "name": "master",
      "protected": true
    },
    "configuration": {
      "id": 1240,
      "max_tokens": 8192,
      "temperature": 0.5,
      "top_p": 1,
      "top_k": null,
      "presence_penalty": 0,
      "frequency_penalty": 0,
      "stop_sequences": null
    },
    "media": [],
    "tools": []
  },
  "status": "OK",
  "code": 200
}

Here’s a focused version that has the data we’ll need to send the request to Anthropic:

{
  "data": {
    "formatted_request": {
      "model": "claude-3-5-sonnet-latest",
      "system": "You are a product feedback classifier",
      "messages": [
        {
          "role": "user",
          "content": "Classify the following feedback: {{ product_feedback }}"
        }
      ],
      "max_tokens": 8192,
      "temperature": 0.5,
      "top_p": 1,
      "stream": false
    },
    "variables": {
      "prompt": {
        "product_feedback": "the product is great!"
      }
    }
  },
  "status": "OK",
  "code": 200
}

Now let’s write a script that will:

- Retrieve the prompt

- Extract the formatted_request and variables to use in the request to Anthropic

import requests

# Replace with your project ID and PromptHub API key
project_id = '5474'
PROMPTHUB_API_KEY = 'your_prompthub_api_key'

url = f'https://app.prompthub.us/api/v1/projects/{project_id}/head?branch=master'
headers = {
    'Content-Type': 'application/json',
    # f-string so the key is actually interpolated into the header
    'Authorization': f'Bearer {PROMPTHUB_API_KEY}'
}

# Function to replace variables in the system message and messages array
def replace_variables_in_prompt(formatted_request, variables):
    # Replace variables in the system message
    if 'system' in formatted_request:
        system_message = formatted_request['system']
        for var, value in variables.items():
            placeholder = f"{{{{ {var} }}}}"
            if placeholder in system_message:
                system_message = system_message.replace(placeholder, value)
        formatted_request['system'] = system_message

    # Replace variables in the messages array
    for message in formatted_request['messages']:
        if 'content' in message:
            content = message['content']
            for var, value in variables.items():
                placeholder = f"{{{{ {var} }}}}"
                if placeholder in content:
                    content = content.replace(placeholder, value)
            message['content'] = content

    return formatted_request

response = requests.get(url, headers=headers)

if response.status_code == 200:
    project_data = response.json()
    formatted_request = project_data['data']['formatted_request']
    variables = project_data['data']['variables']['prompt']

    # Replace variables
    variables['product_feedback'] = "The product is excellent!"  # Test with a hardcoded value
    updated_request = replace_variables_in_prompt(formatted_request, variables)
    print("Updated Request:", updated_request)
else:
    print(f"Error fetching prompt: {response.status_code}")

Using the branch query parameter

In PromptHub, you can create multiple branches for your prompts.

When retrieving a prompt, you can pass the branch parameter in the API request to fetch the latest prompt (the head) from a specific branch. This allows you to test and deploy prompts separately across different environments.

Here’s how you would retrieve a prompt from a staging branch:

url = f'https://app.prompthub.us/api/v1/projects/{project_id}/head?branch=staging'

Here is a 2-min demo video for more information on creating branches and managing prompt commits in PromptHub.
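To make the branch a per-environment setting rather than a hardcoded string, the URL can be built from a parameter. The following is a minimal sketch: the helper name build_head_url and the PROMPTHUB_BRANCH environment variable are hypothetical names introduced here for illustration, not part of the PromptHub API. When no branch is passed, the query parameter is simply omitted.

```python
import os
from urllib.parse import urlencode

BASE_URL = "https://app.prompthub.us/api/v1"


def build_head_url(project_id, branch=None):
    """Return the /head URL for a project, optionally scoped to a branch."""
    url = f"{BASE_URL}/projects/{project_id}/head"
    if branch:
        # urlencode escapes characters that are not safe in a query string
        url += "?" + urlencode({"branch": branch})
    return url


# Hypothetical convention: each environment sets PROMPTHUB_BRANCH,
# falling back to master when it is not set.
branch = os.environ.get("PROMPTHUB_BRANCH", "master")
print(build_head_url("5474", branch))
```

With this in place, a staging deployment would set PROMPTHUB_BRANCH=staging and production would leave it at master, with no code change between the two.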

Now that we’ve retrieved our prompt and isolated the formatted_request object and the variables, the next step is to replace the variable placeholders with data from our application.

Injecting data into variables

As a reminder, here is what the formatted_request object looks like:

{
  "data": {
    "formatted_request": {
      "model": "claude-3-5-sonnet-latest",
      "system": "You are a product feedback classifier",
      "messages": [
        {
          "role": "user",
          "content": "Classify the following feedback: {{ product_feedback }}"
        }
      ],
      "max_tokens": 8192,
      "temperature": 0.5,
      "top_p": 1,
      "stream": false
    },
    "variables": {
      "prompt": {
        "product_feedback": "the product is great!"
      }
    }
  },
  "status": "OK",
  "code": 200
}

We need to replace the variable {{ product_feedback }} with real data.

Here is a script that will look for variables in both the system message and the prompt, replacing them as needed.

Note: Anthropic structures the system message outside the messages array, unlike OpenAI.

def replace_variables_in_prompt(formatted_request, variables):
    # Replace variables in the system message
    if 'system' in formatted_request:
        system_message = formatted_request['system']
        for var, value in variables.items():
            placeholder = f"{{{{ {var} }}}}"  # Format variable as {{ variable_name }}
            if placeholder in system_message:
                system_message = system_message.replace(placeholder, value)
        formatted_request['system'] = system_message

    # Replace variables in the messages array
    for message in formatted_request['messages']:
        if 'content' in message:
            content = message['content']
            for var, value in variables.items():
                placeholder = f"{{{{ {var} }}}}"
                if placeholder in content:
                    content = content.replace(placeholder, value)
            message['content'] = content

    return formatted_request

The script:

- Checks the system message and messages array for variables using the double curly braces syntax.

- Replaces placeholder variables with their corresponding values

After running this script, the prompt will include your dynamic data and be ready to send to Anthropic!
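One caveat: replace_variables_in_prompt matches placeholders written exactly as {{ name }}, with a single space on each side. If a prompt is ever stored as {{name}} instead, nothing would be replaced. The following is a minimal sketch of a whitespace-tolerant variant using a regular expression. The names render and fill_request are illustrative, and the sketch assumes variable names consist of letters, digits, and underscores. It also returns a copy rather than modifying the request in place.

```python
import re

# Matches {{name}}, {{ name }}, {{  name  }} and captures the variable name
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(text, variables):
    """Replace every known placeholder in text; leave unknown ones untouched."""
    def substitute(match):
        name = match.group(1)
        if name in variables:
            return str(variables[name])
        return match.group(0)  # unknown variable: keep the placeholder as-is

    return PLACEHOLDER.sub(substitute, text)


def fill_request(formatted_request, variables):
    """Return a copy of formatted_request with placeholders filled in."""
    filled = dict(formatted_request)
    if isinstance(filled.get("system"), str):
        filled["system"] = render(filled["system"], variables)
    filled["messages"] = [
        {**m, "content": render(m["content"], variables)}
        if isinstance(m.get("content"), str) else m
        for m in filled.get("messages", [])
    ]
    return filled


request = {
    "system": "You are a product feedback classifier",
    "messages": [
        {"role": "user", "content": "Classify the following feedback: {{product_feedback}}"}
    ],
}
print(fill_request(request, {"product_feedback": "The product is excellent!"}))
```

Leaving unknown placeholders in place makes a missing variable easy to spot in the output instead of silently producing an empty string.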

Sending the request to an Anthropic model

Now that we’ve retrieved the prompt and injected our data into the variables, all we need to do is send the request to Anthropic.

Here’s how you can structure the request to pull the data from the formatted_request object.

import requests
import json

# Anthropic API endpoint
anthropic_url = "https://api.anthropic.com/v1/messages"

# Replace this with your Anthropic API key
anthropic_api_key = "your_anthropic_api_key"

# Function to send the request to Anthropic's API
def send_to_anthropic(formatted_request):
    headers = {
        "x-api-key": anthropic_api_key,
        "anthropic-version": "2023-06-01",
        "Content-Type": "application/json"
    }

    # Prepare the payload
    payload = {
        "model": formatted_request["model"],
        "max_tokens": formatted_request["max_tokens"],
        "temperature": formatted_request["temperature"],
        "top_p": formatted_request["top_p"],
        "system": formatted_request["system"],
        "messages": formatted_request["messages"]
    }

    # Send the request
    response = requests.post(anthropic_url, headers=headers, data=json.dumps(payload))

    # Handle the response
    if response.status_code == 200:
        response_data = response.json()
        print("Anthropic Response:", response_data)
        return response_data
    else:
        print(f"Error: {response.status_code}, {response.text}")
        return None

Note that we are pulling the model and parameters from the formatted_request object as well as the system message and messages array.
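The setup section lists the anthropic library, while the function above calls the HTTP endpoint directly with requests. As an alternative, here is a minimal sketch of the same step using Anthropic's Python SDK, assuming it is installed (pip install anthropic). The helper names build_message_kwargs and send_with_sdk are illustrative. The sketch forwards only the fields the guide already uses and drops everything else, such as stream.

```python
# Fields from formatted_request that this sketch forwards to the SDK
ALLOWED_FIELDS = ("model", "max_tokens", "temperature", "top_p", "system", "messages")


def build_message_kwargs(formatted_request):
    """Pick the supported fields out of formatted_request, skipping null values."""
    return {
        key: formatted_request[key]
        for key in ALLOWED_FIELDS
        if formatted_request.get(key) is not None
    }


def send_with_sdk(formatted_request, api_key):
    """Send the request through the anthropic SDK and return the reply text."""
    import anthropic  # imported here so the helper above works without the SDK

    client = anthropic.Anthropic(api_key=api_key)
    message = client.messages.create(**build_message_kwargs(formatted_request))
    # Assumes the first content block is a text block, as in a plain text reply
    return message.content[0].text
```

Calling send_with_sdk(updated_request, "your_anthropic_api_key") would then replace the manual headers and JSON encoding.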

Bringing it all together

Below is a script that combines all three steps: retrieving the prompt, replacing variables, and calling Anthropic.

import requests
import json

# Constants
PROMPTHUB_API_KEY = "your_prompthub_api_key"
ANTHROPIC_API_KEY = "your_anthropic_api_key"
PROJECT_ID = "5474"  # Replace with your project ID
PROMPTHUB_URL = f"https://app.prompthub.us/api/v1/projects/{PROJECT_ID}/head?branch=master"
ANTHROPIC_URL = "https://api.anthropic.com/v1/messages"

# Step 1: Retrieve the prompt from PromptHub
def retrieve_prompt():
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {PROMPTHUB_API_KEY}"
    }
    response = requests.get(PROMPTHUB_URL, headers=headers)
    if response.status_code == 200:
        project_data = response.json()
        formatted_request = project_data['data']['formatted_request']
        variables = project_data['data']['variables']['prompt']
        return formatted_request, variables
    else:
        print(f"Error fetching prompt: {response.status_code}, {response.text}")
        return None, None

# Step 2: Replace variables in the formatted request
def replace_variables(formatted_request, variables):
    # Hard-coded test value for the variable
    variables["product_feedback"] = "The product is not good"

    # Replace variables in system message
    if 'system' in formatted_request:
        system_message = formatted_request['system']
        for var, value in variables.items():
            placeholder = f"{{{{ {var} }}}}"
            if placeholder in system_message:
                system_message = system_message.replace(placeholder, value)
        formatted_request['system'] = system_message

    # Replace variables in messages array
    for message in formatted_request['messages']:
        if 'content' in message:
            content = message['content']
            for var, value in variables.items():
                placeholder = f"{{{{ {var} }}}}"
                if placeholder in content:
                    content = content.replace(placeholder, value)
            message['content'] = content

    return formatted_request

# Step 3: Send the request to Anthropic's API
def send_to_anthropic(formatted_request):
    headers = {
        "x-api-key": ANTHROPIC_API_KEY,
        "anthropic-version": "2023-06-01",
        "Content-Type": "application/json"
    }
    payload = {
        "model": formatted_request["model"],
        "max_tokens": formatted_request["max_tokens"],
        "temperature": formatted_request["temperature"],
        "top_p": formatted_request["top_p"],
        "system": formatted_request["system"],
        "messages": formatted_request["messages"]
    }
    response = requests.post(ANTHROPIC_URL, headers=headers, data=json.dumps(payload))
    if response.status_code == 200:
        response_data = response.json()
        print("Anthropic Response:", response_data)
    else:
        print(f"Error: {response.status_code}, {response.text}")

# Main Function
if __name__ == "__main__":
    formatted_request, variables = retrieve_prompt()
    if formatted_request and variables:
        formatted_request = replace_variables(formatted_request, variables)
        send_to_anthropic(formatted_request)
    else:
        print("Failed to retrieve and prepare the prompt.")
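The script prints the full response body from Anthropic. To get just the classification, the model's text can be pulled out of the content list in that body. The following is a minimal sketch: extract_text is an illustrative helper, and the sample dictionary is a hand-written stand-in for a Messages API response, not real output.

```python
def extract_text(response_data):
    """Join the text of all text blocks in a Messages API response body."""
    blocks = response_data.get("content", [])
    return "".join(
        block.get("text", "") for block in blocks if block.get("type") == "text"
    )


# Illustrative response body (values are placeholders, not real output)
sample_response = {
    "id": "msg_example",
    "type": "message",
    "role": "assistant",
    "content": [{"type": "text", "text": "Negative feedback"}],
    "stop_reason": "end_turn",
}
print(extract_text(sample_response))
```

In the script above, this would be called on response_data inside send_to_anthropic after the status check.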

Using the /head endpoint to retrieve prompts offers the following benefits:

- Implement once, update automatically: Set up the integration once, and updates to prompts in PromptHub instantly reflect in your app

- Separate prompts from code: Make changes to prompts, models, or parameters without requiring a code deployment.

- Version control for prompts: Always fetch the latest committed version of a prompt, ensuring consistency across environments.

- Tailored for different environments: Use the branch parameter to test prompts in staging before deploying them to production.

- Maintain data privacy: Sensitive data stays secure as PromptHub doesn’t log anything during retrieval.

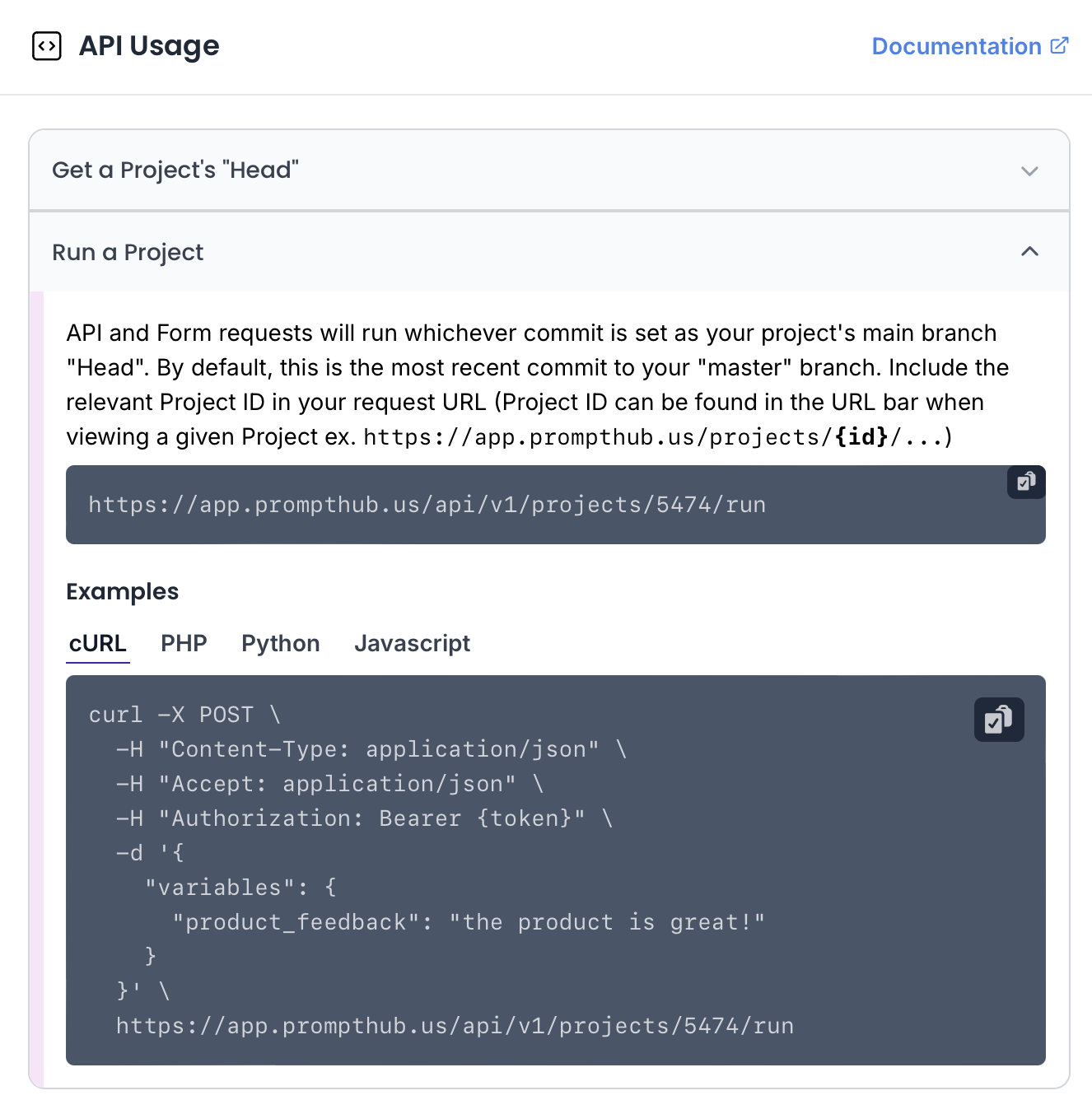

Executing a prompt using the /run Endpoint

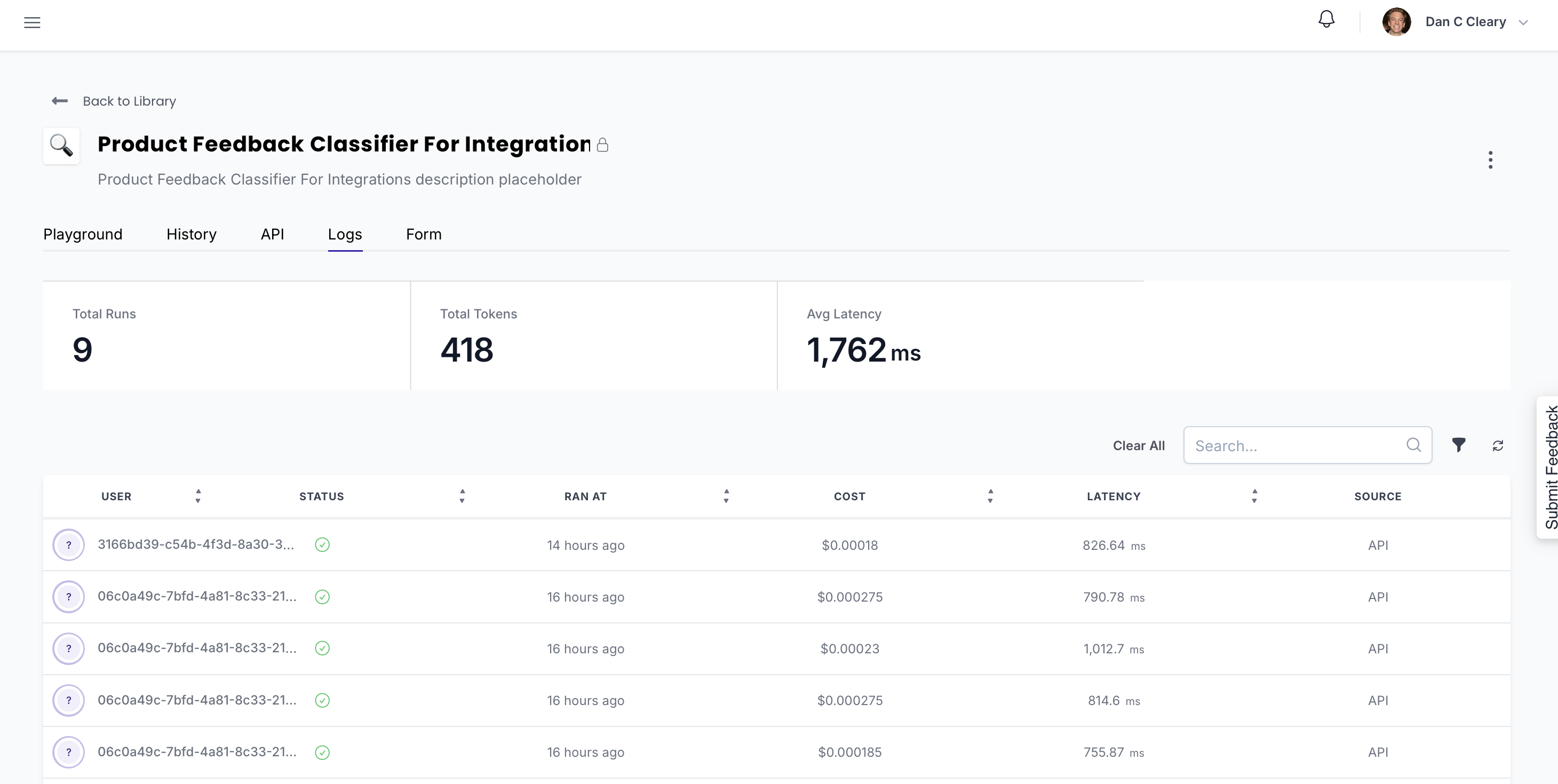

Another way to interact with your prompts in PromptHub is through the /run endpoint. It lets you execute a prompt, pass variables at runtime, and get the LLM's output in one request.

Requests will be logged under the 'Logs' tab, giving visibility into prompt usage and performance.

Making the request

To use the /run endpoint, all you need is the project ID. You can find the project ID in the URL when viewing your project or under the API tab.

Request Structure

URL:

https://app.prompthub.us/api/v1/projects/{project_id}/run

Method:

POST

Headers:

Content-Type: application/json

Authorization: Bearer {PROMPTHUB_API_KEY}

Request body: Provide variable values to dynamically update your prompt

{
  "variables": {
    "product_feedback": "The product is not so great"
  }
}

Request parameters:

If no branch or hash parameters are provided, the endpoint defaults to the latest commit (head) on the master or main branch.

- branch (optional): Specifies the branch from which to run the project. Defaults to the main branch if not provided.

  - Example: ?branch=staging

- hash (optional): Targets a specific commit within a branch. Defaults to the latest commit from the specified branch if not provided.

  - Example: ?hash=c97081db

You can view and manage branches, along with the commit hashes, in the History tab.
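The request structure and optional parameters above can be assembled in one place. The following is a minimal sketch: build_run_request is a hypothetical helper (not part of any PromptHub client library) that returns the URL, headers, and JSON body for a /run call, adding branch and hash to the query string only when they are given.

```python
import json
from urllib.parse import urlencode


def build_run_request(project_id, api_key, variables, branch=None, commit_hash=None):
    """Assemble the URL, headers, and body for a call to the /run endpoint."""
    query = {}
    if branch:
        query["branch"] = branch
    if commit_hash:
        query["hash"] = commit_hash

    url = f"https://app.prompthub.us/api/v1/projects/{project_id}/run"
    if query:
        url += "?" + urlencode(query)

    return {
        "url": url,
        "headers": {
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        "data": json.dumps({"variables": variables}),
    }


request_kwargs = build_run_request(
    "5474",
    "your_prompthub_api_key",
    {"product_feedback": "The product is not so great"},
    branch="staging",
)
print(request_kwargs["url"])
```

The returned dictionary uses the same keyword names as requests.post, so it can be sent with requests.post(**request_kwargs).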

Example Python script

Here’s a script to run a project using the /run endpoint and print the LLM’s output:

import requests
import json

# Constants
PROMPTHUB_API_KEY = "your_prompthub_api_key"
PROJECT_ID = "5474"
PROMPTHUB_RUN_URL = f"https://app.prompthub.us/api/v1/projects/{PROJECT_ID}/run"

# Request payload with variable
payload = {
    "variables": {
        "product_feedback": "The product is awesome"
    }
}

# Headers
headers = {
    "Content-Type": "application/json",
    "Authorization": f"Bearer {PROMPTHUB_API_KEY}"
}

# Making the request
response = requests.post(PROMPTHUB_RUN_URL, headers=headers, data=json.dumps(payload))

# Handling the response
if response.status_code == 200:
    response_data = response.json()
    print("LLM Output:", response_data['data']['text'])
else:
    print(f"Error: {response.status_code}, {response.text}")

Example response

The text field will contain the response from the model.

{
  "data": {
    "id": 57264,
    "project_id": 5474,
    "transaction_id": 60960,
    "previous_output_id": null,
    "provider": "Anthropic",
    "model": "claude-3-5-sonnet-20241022",
    "prompt_tokens": 24,
    "completion_tokens": 43,
    "total_tokens": 67,
    "finish_reason": "end_turn",
    "text": "Positive feedback",
    "cost": 0.000717,
    "latency": 1400.11,
    "network_time": 0,
    "total_time": 1400.11,
    "project": {
      "id": 5474,
      "type": "completion",
      "name": "Product Feedback Classifier For Integrations",
      "description": "Product Feedback Classifier For Integrations description placeholder",
      "groups": []
    }
  },
  "status": "OK",
  "code": 200
}
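Beyond the text field, the response carries usage metadata that can be useful for your own logging. The following is a minimal sketch that reads a few of the fields shown in the example response. The helper name summarize_run is illustrative, and the sketch assumes every /run response has the same fields as this example.

```python
def summarize_run(response_data):
    """Pick the model output and usage fields out of a /run response body."""
    data = response_data["data"]
    return {
        "text": data["text"],
        "model": data["model"],
        "total_tokens": data["total_tokens"],
        "cost": data["cost"],
        "finish_reason": data["finish_reason"],
    }


# Trimmed copy of the example response above
example_response = {
    "data": {
        "model": "claude-3-5-sonnet-20241022",
        "prompt_tokens": 24,
        "completion_tokens": 43,
        "total_tokens": 67,
        "finish_reason": "end_turn",
        "text": "Positive feedback",
        "cost": 0.000717,
    },
    "status": "OK",
    "code": 200,
}
print(summarize_run(example_response))
```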

When to Use the /run Endpoint

- Seamless integration: Simplifies the workflow by handling both prompt retrieval and execution in a single API call

- Flexible model and provider updates: Swap models or providers easily by committing changes in PromptHub—no need for code changes.

- Built-in monitoring and insights: Gain valuable insights into prompt usage with detailed logs in the PromptHub dashboard

Wrapping up

Integrating PromptHub with Anthropic’s API simplifies managing, testing, and deploying prompts with Anthropic’s models. Whether you use the /head endpoint for retrieval or the /run endpoint for direct execution, PromptHub adds version control, dynamic variable replacement, and built-in monitoring to your prompt workflow, so your team can iterate quickly and keep model outputs consistent.